How to define max_queue_size, workers and use_multiprocessing in Keras fit_generator()?

Solution 1:

Q_0:

Question: Does max_queue_size refer to how many batches are prepared on the CPU? How is it related to workers? How do I define it optimally?

From the link you posted, you can learn that your CPU keeps creating batches until the queue reaches the maximum queue size or generation is stopped. You want batches to be ready for your GPU to "take" so that the GPU doesn't have to wait for the CPU. Ideally, the queue is large enough that your GPU always runs near full utilization and never has to wait for the CPU to prepare new batches.
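The queue behaviour described above can be imitated with the standard library alone. The following is a minimal sketch, not Keras's actual implementation: `run_pipeline`, `produce_time` and `consume_time` are hypothetical names, the producer thread stands in for the CPU, and the consuming loop stands in for the GPU.

```python
import queue
import threading
import time


def run_pipeline(num_batches, max_queue_size, produce_time, consume_time):
    """Simulate a CPU producer filling a bounded queue for a GPU consumer.

    Returns (consumed batches, largest queue size observed,
    total seconds the consumer spent waiting for a batch).
    """
    batches = queue.Queue(maxsize=max_queue_size)

    def producer():
        for i in range(num_batches):
            time.sleep(produce_time)  # stand-in for loading/augmenting a batch
            batches.put(i)            # blocks while the queue is full
        batches.put(None)             # sentinel: no more batches

    thread = threading.Thread(target=producer, daemon=True)
    thread.start()

    consumed, peak, waited = [], 0, 0.0
    while True:
        peak = max(peak, batches.qsize())
        start = time.perf_counter()
        item = batches.get()          # blocks while the queue is empty
        waited += time.perf_counter() - start
        if item is None:
            break
        time.sleep(consume_time)      # stand-in for one training step
        consumed.append(item)
    thread.join()
    return consumed, peak, waited
```

If the producer is faster than the consumer, the queue fills up to `max_queue_size` and the producer pauses; if it is slower, `waited` grows, which corresponds to the GPU sitting idle.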

Q_1:

Question: How do I find out how many batches my CPU can/should generate in parallel?

If you see that your GPU is idling and waiting for batches, try increasing the number of workers and perhaps also the queue size.
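One rough way to check whether batch preparation is the bottleneck is to time it outside of Keras. The sketch below is an assumption-laden helper, not a Keras feature: `measure_wait_fraction` and `train_step` are hypothetical names, and it pulls batches from a plain Python generator in a single thread, so it shows only how slow the generator is on its own.

```python
import time


def measure_wait_fraction(generator, train_step, num_steps):
    """Return the fraction of wall time spent waiting for the next batch."""
    wait = 0.0
    total_start = time.perf_counter()
    for _ in range(num_steps):
        t0 = time.perf_counter()
        batch = next(generator)   # time spent preparing a batch
        wait += time.perf_counter() - t0
        train_step(batch)         # time spent consuming it
    total = time.perf_counter() - total_start
    return wait / total if total > 0 else 0.0
```

A fraction close to 1 suggests that the consumer would mostly be waiting, which is the situation where more workers or a larger queue may help; a fraction close to 0 suggests the generator already keeps up.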

Q_2:

Question: Do I have to set use_multiprocessing to True if I change workers? Does it relate to CPU usage?

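You do not have to: with use_multiprocessing set to False the workers run as threads, and with True they run as separate processes. There is a practical analysis of what happens when you set it to True or False, and a recommendation to set it to False to prevent freezing (in my setup, True works fine without freezing). Perhaps someone else can add to our understanding of the topic.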
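To show where the three arguments go, here is a small sketch that forwards them to `fit_generator()`. It assumes the Keras 2.x signature, in which `fit_generator()` accepts `max_queue_size`, `workers` and `use_multiprocessing` as keyword arguments; newer releases deprecate `fit_generator()`, so check the documentation for your version. The wrapper name `fit_with_loader_settings` is hypothetical, and the defaults mirror the Keras 2.x defaults.

```python
def fit_with_loader_settings(model, generator, steps_per_epoch, epochs,
                             workers=1, max_queue_size=10,
                             use_multiprocessing=False):
    """Call model.fit_generator() with explicit data-loading settings.

    Assumes a Keras 2.x style model; any object with a compatible
    fit_generator() method works.
    """
    return model.fit_generator(
        generator,
        steps_per_epoch=steps_per_epoch,
        epochs=epochs,
        max_queue_size=max_queue_size,            # batches buffered ahead of the GPU
        workers=workers,                          # parallel batch producers
        use_multiprocessing=use_multiprocessing,  # processes instead of threads
    )
```

Starting from the defaults and raising `workers` first, then `max_queue_size`, while watching GPU utilization, is one way to follow the advice above.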

In summary:

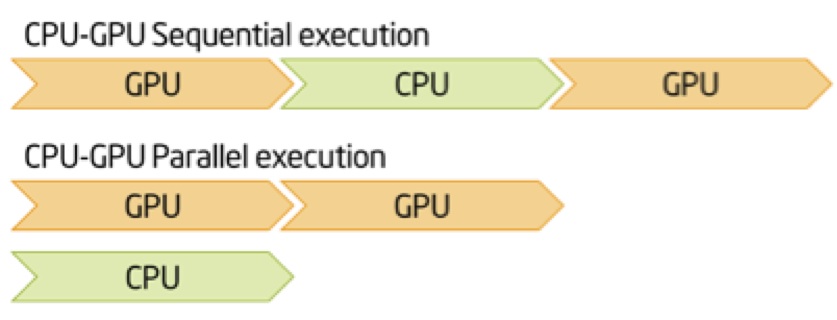

Try not to have a sequential setup; instead, enable the CPU to provide enough data for the GPU.

Also: next time you could (should?) post these as separate questions, so that they are easier to answer.
